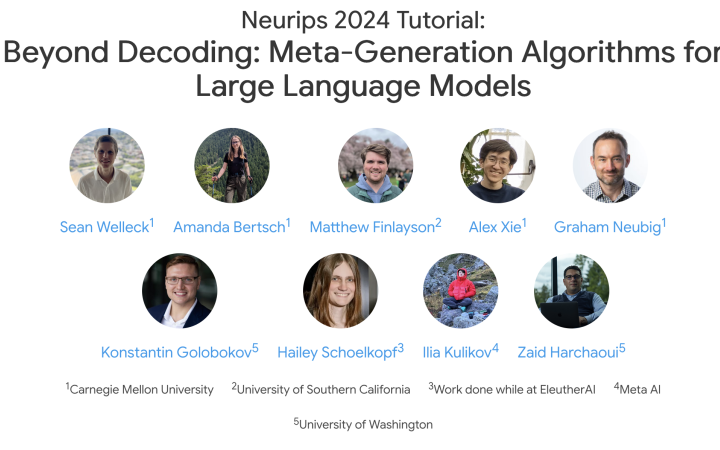

NeurIPS 2024 Tutorial: Beyond Decoding: Meta-Generation Algorithms for Large Language Models

NeurIPS

West Exhibition Hall C

United States

One of the most striking findings in modern research on large language models (LLMs) is that, given a model and dataset of sufficient scale, scaling up compute at training time leads to better final results. However, there is also another lesser-mentioned scaling phenomenon, where adopting more sophisticated methods and/or scaling compute at inference time can result in significantly better output from LLMs. We will present a tutorial on past and present classes of generation algorithms for generating text from autoregressive LLMs, ranging from greedy decoding to sophisticated meta-generation algorithms used to power compound AI systems. We place a special emphasis on techniques for making these algorithms efficient, both in terms of token costs and generation speed. Our tutorial unifies perspectives from three research communities: traditional natural language processing, modern LLMs, and machine learning systems. In turn, we aim to make attendees aware of (meta-)generation algorithms as a promising direction for improving quality, increasing diversity, and enabling resource-constrained research on LLMs.

Full details, schedule and reading list at: https://cmu-l3.github.io/neurips2024-inference-tutorial/

Sean Welleck, Carnegie Mellon University

Amanda Bertsch, Carnegie Mellon University

Matthew Finlayson, USC

Alex Xie, Carnegie Mellon University

Graham Neubig, Carnegie Mellon University

Konstantin Golobokov, University of Washington

Hailey Schoelkopf, EleutherAI

Ilia Kulikov, Meta AI

Zaid Harchaoui, University of Washington