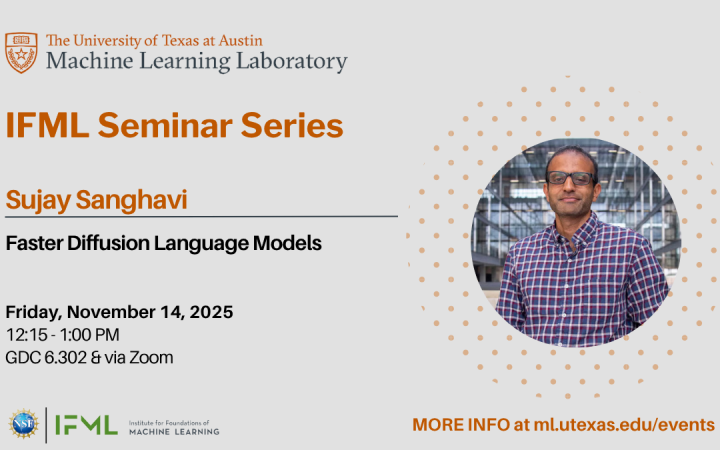

IFML Seminar: 11/14/25 - Faster Diffusion Language Models

Sujay Sanghavi, Bettie Margaret Smith Professor, Chandra Family Department of Electrical and Computer Engineering, UT Austin

The University of Texas at Austin

Gates Dell Complex (GDC 6.302)

2317 Speedway

Austin, TX 78712

United States

Abstract: Diffusion language models (DLMs) are a nascent but promising alternative to GPT-style autoregressive (AR) language models: rather than generating one token at a time from left to right, DLMs start from a set of noise tokens, which they iteratively refine into text. Any-order generation can potentially yield more consistent text, while parallel generation can potentially be faster. In practice, however, parallel generation causes large drops in output quality, and current DLMs generally do not match AR models unless used in one-token-at-a-time mode.

In this talk, we identify two issues with current DLMs: (a) parallel generation samples from the product of per-token marginals instead of the true joint distribution of tokens, and (b) early errors are the primary cause of drops in accuracy. We then develop a new architecture for better sampling, as well as a new self-training process, to significantly reduce these issues.
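As a rough illustration of issue (a), the toy sketch below compares a joint distribution over two token positions with the product of its per-position marginals. The two-phrase vocabulary and the helper names (`marginals`, `product_of_marginals`) are assumptions made up for this example; they are not taken from the talk or from any DLM implementation.

```python
from collections import defaultdict
from itertools import product

# Hypothetical "true" joint distribution over two token positions:
# only two phrases are valid, each equally likely.
joint = {("New", "York"): 0.5, ("Los", "Angeles"): 0.5}


def marginals(joint):
    """Per-position marginal distributions of a joint over token sequences."""
    n_positions = len(next(iter(joint)))
    margs = [defaultdict(float) for _ in range(n_positions)]
    for seq, p in joint.items():
        for i, tok in enumerate(seq):
            margs[i][tok] += p
    return margs


def product_of_marginals(margs):
    """Distribution obtained by sampling every position independently."""
    out = {}
    for combo in product(*(m.items() for m in margs)):
        seq = tuple(tok for tok, _ in combo)
        prob = 1.0
        for _, p in combo:
            prob *= p
        out[seq] = prob
    return out


margs = marginals(joint)
parallel = product_of_marginals(margs)

# Probability mass that independent (parallel) sampling places on
# sequences the joint distribution never produces, e.g. "New Angeles".
invalid_mass = sum(p for seq, p in parallel.items() if seq not in joint)

print(parallel)
print(invalid_mass)
```

In this toy case, filling both positions independently puts half of the probability mass on phrases such as "New Angeles" that the joint distribution never generates, whereas generating one token at a time and conditioning on it would avoid them.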

No prior knowledge of DLMs is assumed.

This talk presents joint work with Parikshit Bansal (joint sampling) and Huaisheng Zhu (self-training).

Bio: Sujay is the Bettie Margaret Smith Professor of ECE at UT Austin, where he conducts research on machine learning with a talented group of students. He directs the NSF TRIPODS Institute and established the Amazon Science Hub, both at UT Austin. He is also currently an Amazon Scholar and has been a Principal Research Scientist at Amazon.

Zoom link: https://utexas.zoom.us/j/84254847215