New IFML Framework for Diffusion Models to Be Included in Several Production Pipelines by Teams at Google

Algorithms for solving inverse problems give breakthrough performance on visual stylization, personalization, and image restoration.

Latent representation spaces have been powerful in enabling computationally efficient methods for image generation. Indeed, state-of-the-art generative AI techniques today (e.g., Stable Diffusion) crucially leverage lower-dimensional representation spaces to enable text-prompted image generation. In recent work, we showed that such representation spaces also enable us to solve a wide variety of inverse problems. An inverse problem is one where only partial or degraded observations of the ground truth are available and the goal is to reconstruct the original signal (e.g., for images, this includes inpainting, denoising, deblurring, de-striping, and super-resolution). In collaboration with Google and Google DeepMind researchers, we developed state-of-the-art algorithms for image editing, restoration, and personalization.
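To make the notion of an inverse problem concrete, here is a minimal NumPy sketch of one of the tasks listed above, inpainting with additive noise. It is an illustration only and not code from the papers: the function name `make_inpainting_problem`, the random pixel mask, and the values of `keep_fraction` and `noise_std` are all assumptions chosen for the example.

```python
import numpy as np


def make_inpainting_problem(image, keep_fraction=0.5, noise_std=0.05, seed=0):
    """Simulate a noisy inpainting measurement of `image` (hypothetical helper).

    The measurement keeps a random subset of pixels, adds Gaussian noise to
    them, and sets every unobserved pixel to zero.
    """
    rng = np.random.default_rng(seed)
    # Boolean mask: True where a pixel is observed.
    mask = rng.random(image.shape) < keep_fraction
    noise = noise_std * rng.standard_normal(image.shape)
    # Linear forward model: masking applied to the noisy ground truth.
    measurement = mask * (image + noise)
    return measurement, mask


# Toy 8x8 "image" with intensities in [0, 1] standing in for the ground truth.
image = np.linspace(0.0, 1.0, 64).reshape(8, 8)
measurement, mask = make_inpainting_problem(image)
print(f"observed {mask.sum()} of {mask.size} pixels")
```

The recovery task is then to estimate `image` given only `measurement` and `mask`. The other tasks mentioned above replace the mask with a different degradation, such as a blur or a downsampling step.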

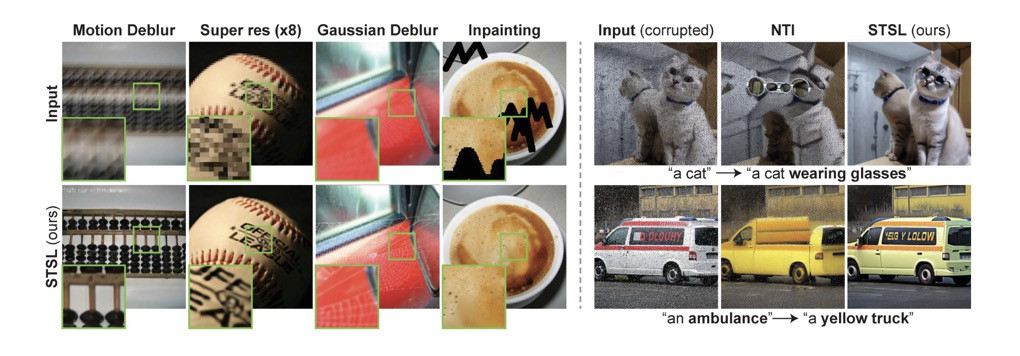

In [1], we developed the first framework to solve linear inverse problems using pretrained latent diffusion models (a class of models that build on diffusion-based Markov processes for sampling from high-dimensional distributions). Formally, these methods rely on posterior sampling in latent space for image recovery; however, they are known to have a quality-limiting bias in the diffusion that leads to blurry images. To address this issue, we developed the Second-order Tweedie sampler from Surrogate Loss (STSL) [2], the first efficient second-order diffusion sampler that mitigates the bias incurred by the widely used first-order samplers. With this method, we devised a surrogate loss function that refines the reverse process at every diffusion step to address inverse problems and perform high-fidelity text-guided image editing. This work has already had major impact. Teams at Google are currently incorporating STSL into next-generation devices and numerous production pipelines, including Pixel, Chromebook, Tablet, and YouTube, with the aim of bringing face authentication to the broader ecosystem.

(See: https://stsl-inverse-edit.github.io for more results).
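For readers unfamiliar with the first-order samplers mentioned above, the sketch below illustrates the first-order Tweedie estimate of a clean signal from a noisy one, E[x0 | x_t] = x_t + sigma^2 * score(x_t), under the noising model x_t = x0 + sigma * eps. It is not STSL and not code from [2]: the one-dimensional Gaussian prior, the function names, and the numerical values are assumptions, chosen because the score and the exact posterior mean are then available in closed form for comparison.

```python
import numpy as np


def gaussian_score(x_t, prior_mean, prior_std, noise_std):
    """Score (gradient of the log density) of the noisy marginal.

    With x0 ~ N(prior_mean, prior_std^2) and x_t = x0 + noise_std * eps,
    the marginal of x_t is N(prior_mean, prior_std^2 + noise_std^2).
    """
    return -(x_t - prior_mean) / (prior_std**2 + noise_std**2)


def tweedie_first_order(x_t, score, noise_std):
    """First-order Tweedie estimate of E[x0 | x_t]."""
    return x_t + noise_std**2 * score


# Assumed toy values for the prior and the noise level.
prior_mean, prior_std, noise_std = 1.0, 2.0, 0.5
x_t = np.array([-1.0, 0.5, 2.0])

estimate = tweedie_first_order(
    x_t, gaussian_score(x_t, prior_mean, prior_std, noise_std), noise_std
)

# Exact Gaussian posterior mean, used here only as a reference.
closed_form = (prior_std**2 * x_t + noise_std**2 * prior_mean) / (
    prior_std**2 + noise_std**2
)
print(np.allclose(estimate, closed_form))
```

In this Gaussian toy case the estimate coincides with the exact posterior mean. In a diffusion model the score comes from a learned network and the data distribution is far from Gaussian, which is the setting where the bias discussed above arises.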

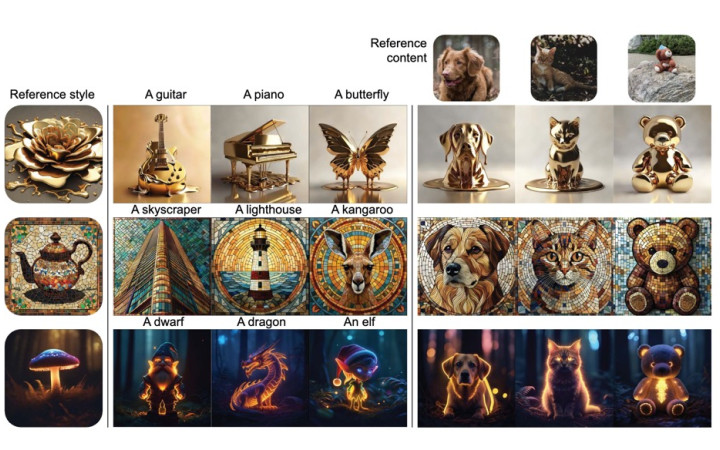

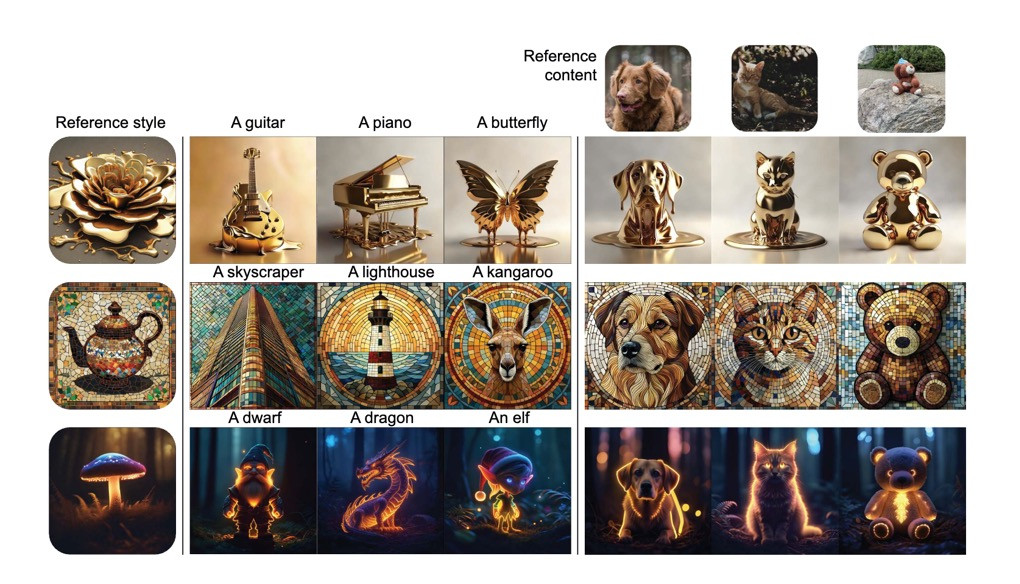

In [3], we developed a new framework called RB-Modulation that enables time-sensitive applications such as personalization on mobile devices (e.g., changing the style of an image based on a reference style or composing a reference style with given content). Specifically, our approach results in more than 20x improvement in speed compared to training-based methods, while outperforming previous state-of-the-art approaches in training-free personalization tasks. Teams at Google are incorporating RB-Modulation into the production pipelines of Pixel and YouTube.

Figure 2 presents qualitative results for stylization and content-style composition (see more examples at https://rb-modulation.github.io/). We have also released the source code on GitHub and a demo on Hugging Face, which became the number one demo of the week on Hugging Face Spaces with more than 18,000 users.

References

[1] Rout, Litu, Negin Raoof, Giannis Daras, Constantine Caramanis, Alex Dimakis, and Sanjay Shakkottai. "Solving linear inverse problems provably via posterior sampling with latent diffusion models." Advances in Neural Information Processing Systems 36 (2024).

[2] Rout, Litu, Yujia Chen, Abhishek Kumar, Constantine Caramanis, Sanjay Shakkottai, and Wen-Sheng Chu. "Beyond First-Order Tweedie: Solving Inverse Problems using Latent Diffusion." IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2024).

[3] Rout, Litu, Yujia Chen, Nataniel Ruiz, Abhishek Kumar, Constantine Caramanis, Sanjay Shakkottai, and Wen-Sheng Chu. "RB-Modulation: Training-Free Personalization of Diffusion Models using Stochastic Optimal Control." arXiv preprint arXiv:2405.17401 (2024).