Superquantile-Based Learning: From Centralized to Federated Learning

The superquantile (or conditional value-at-risk) has long been used in finance, management science, and operations research, and it is drawing increasing attention in machine learning, e.g., in problems with safety or fairness constraints. We review some of these new applications of the superquantile, giving pointers to exciting recent developments. These applications give rise to nonsmooth superquantile-based objective functions that admit an explicit subgradient calculus. To make these functions amenable to the gradient-based algorithms popular in machine learning, we propose to smooth them by infimal convolution and provide efficient procedures for computing gradients of the smooth approximations.
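To make the (nonsmooth) superquantile concrete, here is a minimal sketch for an empirical distribution with uniform weights. It uses the standard variational formula, superquantile at level p = min over eta of eta + mean(max(x - eta, 0)) / (1 - p), whose minimizer is the p-quantile. The function names `superquantile` and `superquantile_weights` are illustrative assumptions, not code from the papers, and the sketch does not implement the infimal-convolution smoothing.

```python
import numpy as np

def _quantile_index(n, p):
    # Index (in sorted order) of the smallest value whose empirical CDF reaches p.
    return max(int(np.ceil(p * n)) - 1, 0)

def superquantile(losses, p):
    """Empirical superquantile (CVaR) at level p in [0, 1), uniform weights."""
    x = np.sort(np.asarray(losses, dtype=float))
    eta = x[_quantile_index(x.size, p)]  # the p-quantile minimizes the formula
    return eta + np.mean(np.maximum(x - eta, 0.0)) / (1.0 - p)

def superquantile_weights(losses, p):
    """One subgradient of the superquantile with respect to the loss vector.

    Losses above the p-quantile get weight 1 / (n (1 - p)); losses equal to
    the quantile share the remaining mass so that the weights sum to one.
    """
    x = np.asarray(losses, dtype=float)
    n = x.size
    eta = np.sort(x)[_quantile_index(n, p)]
    w = np.where(x > eta, 1.0 / (n * (1.0 - p)), 0.0)
    ties = x == eta
    w[ties] = (1.0 - w.sum()) / ties.sum()
    return w
```

For losses 1, 2, 3, 4 and p = 0.5, `superquantile` returns 3.5, the mean of the two largest losses. The weights from `superquantile_weights` can be combined with per-sample gradients through the chain rule to obtain a subgradient with respect to model parameters.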

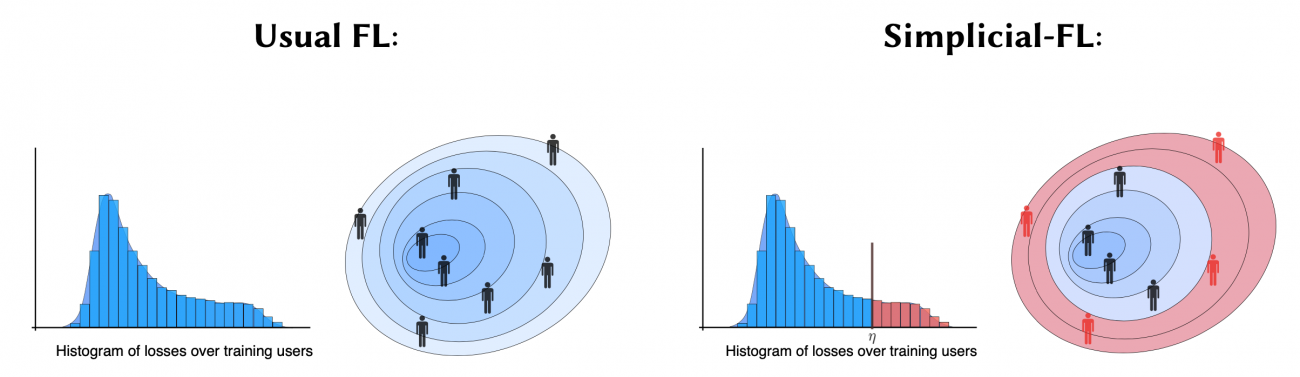

We focus on a specific application of the superquantile to federated learning, by developing a federated learning framework called Simplicial-FL that allows one to handle heterogeneous client devices that may not conform to the population data distribution. The proposed approach hinges upon a parameterized superquantile-based objective, where the parameter ranges over levels of conformity. We introduce a stochastic optimization algorithm compatible with secure aggregation, which interleaves device filtering steps with federated averaging steps. We conclude with numerical experiments with neural networks on computer vision and natural language processing data.
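The interleaving of device filtering and federated averaging can be pictured with the toy sketch below. It is a simplified illustration under stated assumptions, not the algorithm from the papers: clients hold least-squares problems, the filtering rule keeps the fraction `theta` of clients with the largest current loss (a stand-in for the tail selected by the superquantile), and client sampling and secure aggregation are ignored. All names (`filter_clients`, `federated_round`, `theta`) are hypothetical.

```python
import numpy as np

def local_loss(w, X, y):
    # Mean squared error of a linear model on one client's data.
    return 0.5 * np.mean((X @ w - y) ** 2)

def local_update(w, X, y, lr, steps):
    # A few steps of gradient descent on the client's own loss.
    w = w.copy()
    for _ in range(steps):
        w -= lr * X.T @ (X @ w - y) / len(y)
    return w

def filter_clients(client_losses, theta):
    # Assumed filtering rule: keep the ceil(theta * n) clients with highest loss.
    losses = np.asarray(client_losses, dtype=float)
    m = max(1, int(np.ceil(theta * losses.size)))
    return np.argsort(losses)[-m:]

def federated_round(w, clients, theta, lr=0.1, steps=5):
    """One round: filter clients by loss, then average their local updates."""
    losses = [local_loss(w, X, y) for X, y in clients]
    selected = filter_clients(losses, theta)
    updates = [local_update(w, *clients[i], lr, steps) for i in selected]
    return np.mean(updates, axis=0), selected
```

Calling `federated_round` repeatedly, feeding each returned model into the next round, gives the overall training loop. Smaller values of `theta` concentrate training on the worst-off clients, while `theta = 1` reduces the sketch to plain federated averaging over all clients.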

Results

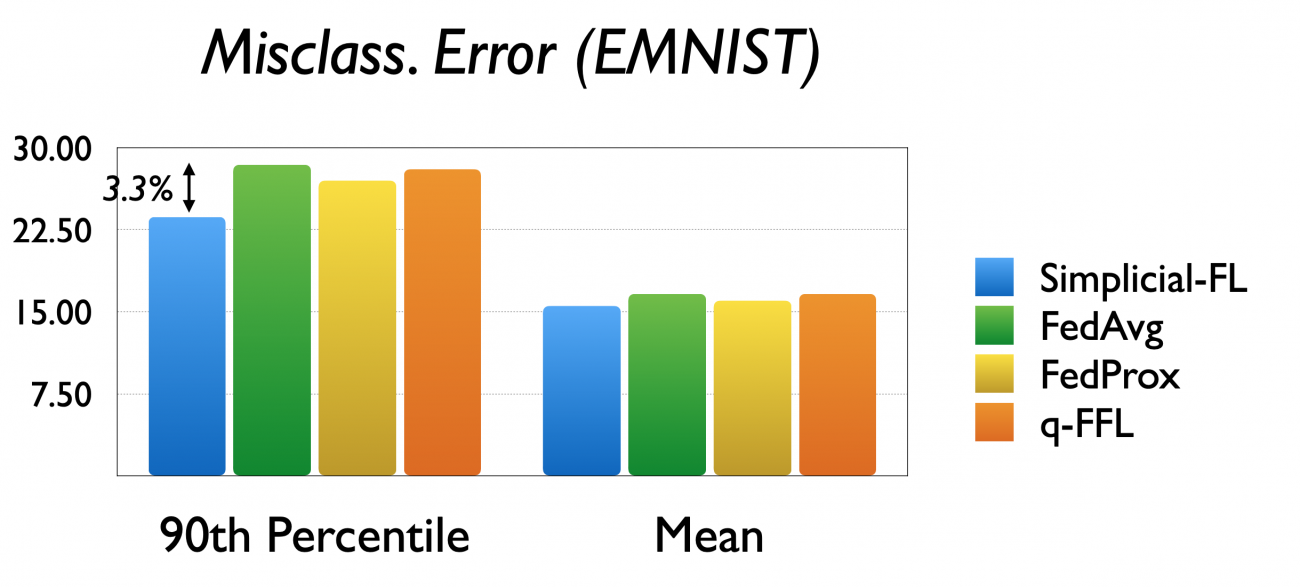

In the federated setting, Simplicial-FL achieves the following on image and text benchmarks:

- the smallest 90th percentile of the misclassification error on unseen test devices among the methods compared, and

- competitive performance on the mean of the misclassification error on the test devices.

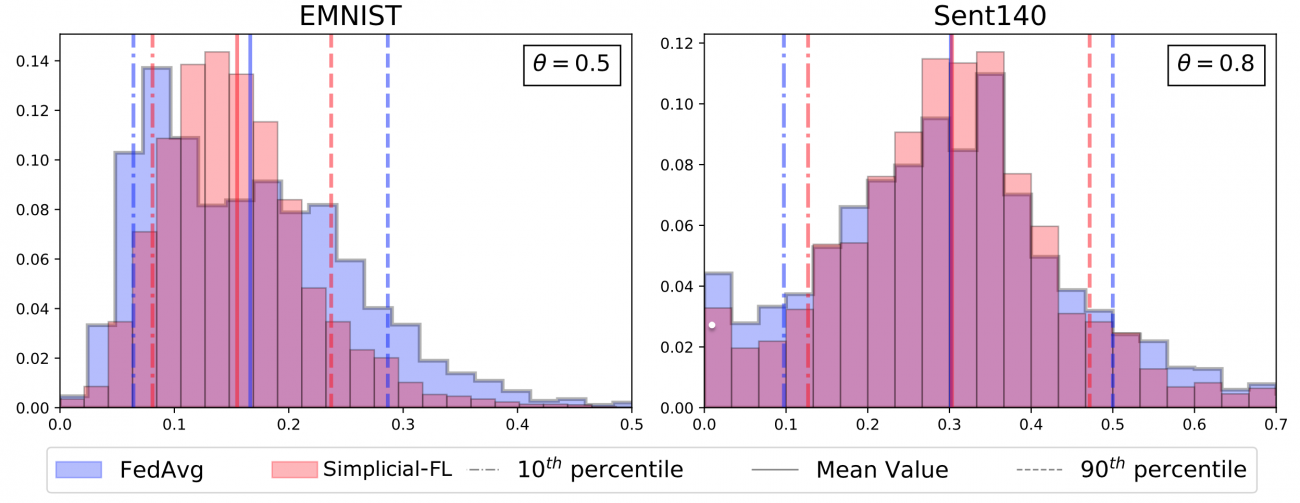

In addition, Simplicial-FL (in red) yields a more equitable distribution of misclassification errors across test devices, with a smaller 90th percentile.

References

[1] Laguel, Y., Pillutla, K., Malick, J., and Harchaoui, Z., 2021. A Superquantile Approach to Federated Learning with Heterogeneous Devices. In CISS 2021.

[2] Laguel, Y., Pillutla, K., Malick, J., and Harchaoui, Z., 2021. Superquantiles at Work: Machine Learning Applications and Efficient (Sub)gradient Computation. In review.

Acknowledgments

We acknowledge support from NSF DMS 2023166, DMS 1839371, CCF 2019844, the CIFAR program “Learning in Machines and Brains”, faculty research awards, and a JP Morgan PhD fellowship. This work has been partially supported by MIAI – Grenoble Alpes (ANR-19-P3IA-0003).